Computer Vision (CV) is one of the fastest-growing branches of Artificial Intelligence today. We have talked about it in various articles, but today we will go into detail on how to build neural network models that recognize various objects: vehicles, license plates, people, animals, etc.

Understanding the data in CV

Neural models in Deep Learning depend primarily on data. In general, the more good-quality data you have, the more accurate your model is likely to be. But what is the data made of?

Data = Images + Labels

To make a Computer Vision solution work, the machine must learn the connection between the data (images or video) and their respective labels. If the images are unlabeled, the computer cannot learn to recognize the objects we want to detect, so we need to create the labels manually for every single image in the dataset.

Example:

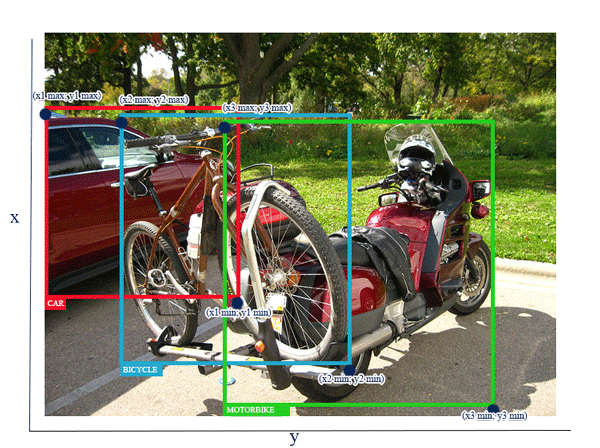

Suppose we want the computer to recognize vehicles. First, we must label the image by drawing a bounding box around each object we want to recognize. This tells the machine the coordinates at which the object is located within the full image. The computer only understands numerical values, so this step is necessary to communicate that the pixels in this position correspond to a vehicle.
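As a rough illustration of what such a label looks like to the machine, here is a minimal sketch of a bounding box stored as numbers. The `BoundingBox` class, its field names, and the 640x480 image size are assumptions made for this example. Many annotation tools also store boxes as center and size values scaled to the 0-1 range, which is what `to_normalized` computes.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """One labeled object: a class name plus the pixel coordinates of the box corners."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def to_normalized(self, image_width: int, image_height: int) -> tuple:
        """Return (x_center, y_center, width, height), each scaled to the 0-1 range."""
        x_center = (self.x_min + self.x_max) / 2 / image_width
        y_center = (self.y_min + self.y_max) / 2 / image_height
        width = (self.x_max - self.x_min) / image_width
        height = (self.y_max - self.y_min) / image_height
        return (x_center, y_center, width, height)


# Hypothetical example: a car covering the central part of a 640x480 image.
car = BoundingBox(label="car", x_min=160, y_min=120, x_max=480, y_max=360)
normalized = car.to_normalized(image_width=640, image_height=480)
print(car.label, normalized)
```

The label and the four numbers together are what the model is trained against: the image supplies the pixels, the box says where the vehicle is.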

Let’s see an image to understand better:

After training, the model will be able to detect the presence and type of vehicle automatically.

Now that we know the basics, we can proceed with the steps for building the neural model.

STEP 1 – Data collection

The first step is to collect the data that will serve as the basis for training the neural model. This is a crucial phase, because gathering data that meets your requirements is a very hard task. Not every dataset we need is available as open source, so in some cases we have to create it from scratch.

The type of data to look for depends on the final goal we want to achieve. The first obstacle we face is that open-source data is often part of mixed collections in which not all of the data is needed. We are therefore faced with a “garbage bag” from which we must extract only the data that is valuable for our purpose.

The number of images required for training depends on the type of data a neural network will evaluate. Generally, every characteristic and every grade of that characteristic the neural network must assess requires a set of training images. The more images provided for each category, the more finely the neural network can learn to assess those categories.
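One simple way to check whether each category has enough images is to count the labels per class. The sketch below uses hypothetical labels and an arbitrary minimum of 2 images per class. The threshold that makes sense in practice depends on the task and is not given in this article.

```python
from collections import Counter

# Hypothetical labels, one entry per annotated image in the collected dataset.
image_labels = ["car", "truck", "car", "bus", "car", "truck"]


def count_images_per_class(labels):
    """Count how many images are available for each category."""
    return Counter(labels)


def underrepresented(counts, min_images):
    """Return the categories that have fewer than min_images examples."""
    return sorted(name for name, n in counts.items() if n < min_images)


counts = count_images_per_class(image_labels)
print(counts)
print(underrepresented(counts, min_images=2))
```

Categories flagged here are candidates for collecting more images before training.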

STEP 2 – EDA (Exploratory Data Analysis)

In this phase, we need to filter the data from the digital garbage bag. We sort out all the images in the dataset and their respective labels, check for missing values in the annotations, perform image augmentation, and split the dataset into training and testing sets for our model. Before that, keep in mind that the more images there are in the training dataset, the more computing capacity is required. To train a deep learning model on a large number of images, make sure that you have a GPU available and the skills needed to ensure the accuracy of the model.
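Two of the checks described above, dropping images with missing annotations and splitting the dataset, can be sketched with the standard library alone. The sample layout (a dictionary with `image` and `boxes` keys), the 80/20 split, and the fixed seed are assumptions made for this example.

```python
import random


def drop_unlabeled(samples):
    """Keep only samples that have at least one annotation."""
    return [s for s in samples if s.get("boxes")]


def train_test_split(samples, test_fraction=0.2, seed=42):
    """Shuffle a copy of the samples and split it into (train, test) lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical dataset: ten labeled images and two with missing annotations.
samples = [{"image": f"img_{i}.jpg", "boxes": [(10, 10, 50, 50)]} for i in range(10)]
samples.append({"image": "img_10.jpg", "boxes": []})  # empty annotation list
samples.append({"image": "img_11.jpg"})               # annotation key missing

clean = drop_unlabeled(samples)
train, test = train_test_split(clean, test_fraction=0.2)
print(len(clean), len(train), len(test))
```

Fixing the seed keeps the split reproducible, so later experiments are evaluated on the same test images.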

STEP 3 – Format conversion

The second obstacle we can encounter is the diversity of annotation formats. If we train our model on several open-source datasets, their annotations will rarely share the same format. For example, different datasets may store their annotations as XML, CSV, Excel, TXT, JSON, etc.

So, the challenge lies in filtering the data we need and turning it all into one format.
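A minimal sketch of this conversion, assuming two hypothetical source layouts: a CSV file that stores box corners and a JSON file that stores x, y, width and height. All column and key names here are invented for the example. Real datasets define their own, so each one needs its own small reader that maps into the shared format.

```python
import csv
import io
import json


def from_csv(text):
    """Read rows with corner coordinates (xmin, ymin, xmax, ymax)."""
    rows = csv.DictReader(io.StringIO(text))
    return [
        {
            "image": r["filename"],
            "label": r["class"],
            "box": [int(r["xmin"]), int(r["ymin"]), int(r["xmax"]), int(r["ymax"])],
        }
        for r in rows
    ]


def from_json(text):
    """Read records whose boxes are stored as x, y, width, height."""
    records = []
    for item in json.loads(text):
        x, y, w, h = item["bbox"]
        # Convert to corner coordinates so both sources share one convention.
        records.append({"image": item["file"], "label": item["category"],
                        "box": [x, y, x + w, y + h]})
    return records


csv_text = "filename,class,xmin,ymin,xmax,ymax\ncar_01.jpg,car,10,20,110,220\n"
json_text = '[{"file": "bus_01.jpg", "category": "bus", "bbox": [5, 15, 100, 200]}]'

unified = from_csv(csv_text) + from_json(json_text)
print(unified)
```

Once every source is mapped to the same record shape, the rest of the pipeline only has to handle one format.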

STEP 4 – Model selection and Training

Once the dataset is ready, we move on to the next step: building the model, starting with the search for the algorithm best suited to the purpose.

We then train the chosen algorithm on the previously collected data. Model training involves many settings and can take hours or even days, depending on how big your dataset is.

Taking advantage of transfer learning, that is, taking a pre-trained network and repurposing it for another task, can accelerate this process.

STEP 5 – Neural Model Testing

The last step, but no less important, is to test the model. In this phase, we verify how well the model performs. For the test, we give the computer images that are not present in our training dataset and check how many mistakes the model makes. Separating training and test data ensures that a neural network does not accidentally train on data used later for evaluation.
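A quick sanity check for this separation is to confirm that no image appears in both sets. The sketch below compares file names only, which is an assumption: it will not catch the same picture saved under two different names.

```python
def find_leaked_images(train_files, test_files):
    """Return the file names that appear in both the training and the test set."""
    return sorted(set(train_files) & set(test_files))


# Hypothetical file lists; img_003.jpg was placed in both sets by mistake.
train_files = ["img_001.jpg", "img_002.jpg", "img_003.jpg"]
test_files = ["img_003.jpg", "img_004.jpg"]

leaked = find_leaked_images(train_files, test_files)
print(leaked)
```

An empty result means the two sets are disjoint by name. Any file listed should be removed from one of the sets before evaluation.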

The accuracy score the model produces tells us whether the model we built works well for our problem. As a rule of thumb, an accuracy score above 80% is often taken as a sign of good performance. Otherwise, we need to rebuild the model by fine-tuning the parameters, correcting mistakes made in the annotations, or addressing any other factor that lowers the model's performance.
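For a classification-style check, the accuracy score is simply the share of test images for which the predicted label matches the true label. The sketch below uses hypothetical predictions and treats the 80% figure mentioned above as a configurable threshold, not a fixed rule.

```python
def accuracy(predictions, ground_truth):
    """Fraction of test samples whose predicted label equals the true label."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("cannot compute accuracy on an empty test set")
    correct = sum(p == t for p, t in zip(predictions, ground_truth))
    return correct / len(ground_truth)


# Hypothetical results on ten held-out test images (one truck is misread as a car).
ground_truth = ["car", "bus", "car", "truck", "car", "bus", "car", "truck", "car", "bus"]
predictions = ["car", "bus", "car", "car", "car", "bus", "car", "truck", "car", "bus"]

ACCURACY_THRESHOLD = 0.80  # assumption: the rule-of-thumb value from the text
score = accuracy(predictions, ground_truth)
meets_threshold = score > ACCURACY_THRESHOLD
print(f"accuracy={score:.2f}, meets threshold: {meets_threshold}")
```

Object detection models are usually also judged on how well the predicted boxes overlap the labeled ones, so a single accuracy number is only a first indication.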

Pragma Etimos Solutions

Pragma Etimos offers Computer Vision solutions tailored to customer needs. We strongly believe that it is not human work that must adapt to technology, but technology that must be designed and optimized for human needs.

License plate detection: entering a license plate number in the search field enables the background video analysis function. If a vehicle with that license plate is intercepted, the frame in which it was intercepted is displayed in the interface, and an image file with the same information is written for an accurate subsequent check.

Object detection: you can start a search for objects (vehicles, scooters, animals, etc.). Selecting the object search enables the background video analysis function. In the meantime, the software continues to search for the object in other frames.

People recognition: it is possible to identify groups of people and individuals in real time. If the requested person or group is intercepted, the frame in which they were intercepted is displayed in the interface and written to an image file with the same information for an accurate subsequent check.

Face identification: starting from a face, it returns information on a person’s age, gender, and emotion. It can also compare two faces and determine whether or not they belong to the same person.
